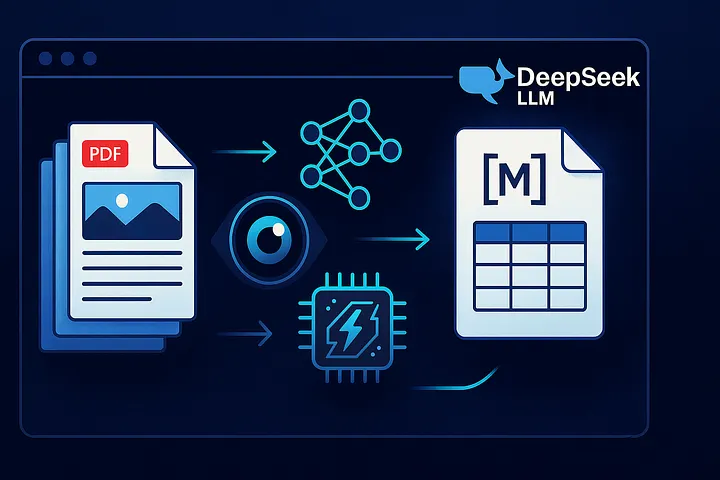

You know that feeling when a “simple OCR” job quietly turns into a week of hacks: tables misread, headings mangled, everything off by a few pixels? Same. DeepSeek-OCR feels like a breath of fresh air because it doesn’t just “read pixels.” It compresses and understands documents as a vision-language task, then emits clean text/Markdown you can actually use.


What it is: a 3B-parameter vision-language OCR model from DeepSeek that turns images/PDF pages into structured Markdown. It’s designed for long, messy, real-world docs (tables, forms, screenshots).

What’s new/different: it uses “contexts optical compression”: representing text as high-res images so the model can handle much longer documents with fewer tokens (7-20x reduction reported).

Why you should care: faster/cheaper long-doc workflows, better tables, easier batch pipelines. vLLM support is already upstream for production-style serving.

The big idea

Traditional OCR → detect characters → assemble words → guess structure.

DeepSeek-OCR flips the lens: treat the whole page as a visual context, then generate the text/Markdown with layout awareness. The research calls this compressing long textual contexts via optical 2D mapping, reporting 7-20× token savings with strong fidelity. That’s why it scales to large PDFs without blowing up your token budget.
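The compression ratio is plain arithmetic: the text tokens a page would cost, divided by the vision tokens used to encode it. The sketch below shows that calculation. The 2,000-token page in the usage block is a hypothetical figure, not a measurement.

```python
import math

def compression_ratio(text_tokens: int, vision_tokens: int) -> float:
    """Text tokens represented per vision token (e.g. 1000 / 100 = 10x)."""
    if vision_tokens <= 0:
        raise ValueError("vision_tokens must be positive")
    return text_tokens / vision_tokens

def vision_tokens_for(text_tokens: int, ratio: float) -> int:
    """Vision tokens needed to carry `text_tokens` at a given ratio, rounded up."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return math.ceil(text_tokens / ratio)

if __name__ == "__main__":
    page_text_tokens = 2000  # hypothetical dense page, not a measurement
    for r in (7, 20):
        print(f"{r}x -> {vision_tokens_for(page_text_tokens, r)} vision tokens")
```

Across the reported 7-20× range, that hypothetical page would cost roughly 100 to 286 vision tokens instead of 2,000 text tokens.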

And it’s not vaporware: there’s a real model card, a GitHub repo, and field reports of sub-second-per-page speeds on high-end GPUs.

Flavors & presets

DeepSeek’s Hugging Face model card exposes simple inference presets via `base_size`, `image_size`, and `crop_mode`. Think of these as “modes.”

- Tiny → `base_size=512, image_size=512, crop_mode=False`. When: speed over accuracy, quick skims, low-VRAM instances.
- Small → `640, 640, no-crop`. When: general pages without dense tables.
- Base → `1024, 1024, no-crop`. When: most PDFs/screenshots; good quality/speed balance.
- Large → `1280, 1280, no-crop`. When: design docs / diagrams / small fonts where details matter.
- “Gundam” (their name!) → `base_size=1024, image_size=640, crop_mode=True`. When: dense tables and cramped layouts; crop helps the model zoom into regions.

Pro tip: Start with Base. If tables look iffy, try Gundam. If you’re tight on VRAM, drop to Small/Tiny.
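The presets can live in one dictionary and be splatted into the inference call. The values below are the ones listed above. `pick_preset` is a hypothetical helper that encodes the pro tip. It is not part of the model’s API.

```python
# Preset values as listed on the DeepSeek-OCR model card.
PRESETS = {
    "tiny":   {"base_size": 512,  "image_size": 512,  "crop_mode": False},
    "small":  {"base_size": 640,  "image_size": 640,  "crop_mode": False},
    "base":   {"base_size": 1024, "image_size": 1024, "crop_mode": False},
    "large":  {"base_size": 1280, "image_size": 1280, "crop_mode": False},
    "gundam": {"base_size": 1024, "image_size": 640,  "crop_mode": True},
}

def pick_preset(dense_tables: bool = False, low_vram: bool = False) -> str:
    """Hypothetical helper: Small when VRAM is tight, Gundam for dense tables, else Base."""
    if low_vram:
        return "small"
    if dense_tables:
        return "gundam"
    return "base"
```

In a notebook you would then pass `**PRESETS[pick_preset(dense_tables=True)]` alongside the other `model.infer(...)` arguments.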

CPU vs GPU: what actually works

- Model size: ~6-7 GB of weights (bf16), per Simon Willison’s Weblog. Running purely on CPU is possible but typically too slow for production. A single modern NVIDIA GPU is the sweet spot.

- VRAM comfort zone: 8-12 GB is enough for the standard presets; more helps batch throughput. The model card shows 3B params and defaults to bf16; the examples target CUDA 11.8 + PyTorch 2.6.

- Throughput in the wild: community reports show <1s per page on an Ada A6000; and press coverage claims “hundreds of thousands of pages/day” on an A100 at scale (that’s a pipeline claim, but it signals real headroom). Always test on your content.

- Production tip: it’s now supported by vLLM (nightly at the time of writing) for efficient serving/batching. Use this when you’re moving beyond notebooks into microservices.
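The ~6-7 GB figure mostly follows from multiplying parameters by bytes per parameter. The back-of-envelope sketch below covers the weights only. Activations, image features and the KV cache come on top, which is one reason the 8-12 GB range leaves headroom. The parameter count is the rounded “3B” from the model card.

```python
def weight_memory_gb(n_params: float, bytes_per_param: int) -> float:
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9

if __name__ == "__main__":
    n = 3e9  # "3B parameters", rounded as on the model card
    for name, nbytes in [("fp32", 4), ("bf16", 2)]:
        print(f"{name}: ~{weight_memory_gb(n, nbytes):.1f} GB")
```

This prints ~12.0 GB for fp32 and ~6.0 GB for bf16, which is why bf16 is the default.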

Where DeepSeek-OCR shines

- Complex tables → Markdown tables with fewer post-fixes.

- Screenshots/forms where layout matters to meaning.

- Long PDFs (policy docs, RFQs, catalogs) where token budgets normally explode.

These strengths line up with the paper’s reported 7-20× token compression and visual-first decoding.

Colab: run your PDF end-to-end (upload → Markdown → ZIP download)

Below is a clean Colab cell sequence you can run. It matches the dependency versions from the model card and includes a fast path plus a retry for dense pages. FlashAttention is optional; the code falls back to eager attention if it’s unavailable.

What it does:

- installs deps (CUDA-compatible PyTorch, Transformers, pdf2image, poppler)

- lets you upload your PDF

- converts pages → PNGs

- loads `deepseek-ai/DeepSeek-OCR`

- infers each page to Markdown (with a retry mode for dense pages)

- zips all outputs (per-page MD + combined MD + timings.csv) for download

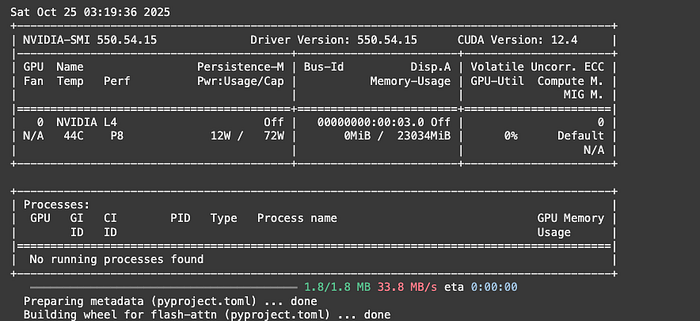

1) GPU + dependencies: why these exact versions?

```
!nvidia-smi
!pip -q install --upgrade pip
!pip -q install torch==2.6.0 torchvision==0.21.0 torchaudio==2.6.0 --index-url https://download.pytorch.org/whl/cu118
!pip -q install transformers==4.46.3 tokenizers==0.20.3 einops addict easydict
!pip -q install flash-attn==2.7.3 --no-build-isolation || echo "flash-attn not available; falling back to eager attention"
!apt-get -y update >/dev/null 2>&1 && apt-get -y install poppler-utils >/dev/null 2>&1
!pip -q install pdf2image pillow bs4 pandas tabulate
```

What’s happening:

- `!nvidia-smi` confirms a GPU is attached to your Colab runtime and shows driver/CUDA versions. If you don’t see an NVIDIA card, switch the runtime to GPU.
- The PyTorch pins (the `torch==2.6.0` wheel from the cu118 index, i.e. `2.6.0+cu118`) match CUDA 11.8. This avoids the classic “CUDA mismatch” crash.
- `transformers` + `tokenizers` load the DeepSeek-OCR model.
- `einops`, `addict`, `easydict` are light utilities some remote code uses.
- `flash-attn` accelerates attention on supported GPUs. The `|| echo ...` part ensures your notebook doesn’t stop if FlashAttention can’t build; it’ll fall back later.
- `poppler-utils` + `pdf2image` convert PDF pages to PNGs. Without Poppler, `pdf2image` can’t do anything.

If things fail:

- FlashAttention often fails on T4s (older Turing GPUs) or when Colab’s toolchain changes. That’s fine; the loading code in step 4 falls back to eager attention.

- If PyTorch import says “CUDA not available,” your runtime is CPU-only. Re-select a GPU runtime.
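The loading code in step 4 only checks that `flash_attn` imports. FlashAttention 2 targets Ampere, Ada and Hopper GPUs (compute capability 8.0 and up). On a T4 (7.5), an import check alone may therefore pass and the failure could surface later, at inference time. Below is a sketch of a stricter check. `choose_attn_impl` and `detect_attn_impl` are hypothetical helpers, not part of the model repo.

```python
def choose_attn_impl(flash_importable: bool, cuda_available: bool, capability: tuple) -> str:
    """Pick FlashAttention 2 only on CUDA GPUs with compute capability 8.x or newer."""
    if flash_importable and cuda_available and capability[0] >= 8:
        return "flash_attention_2"
    return "eager"

def detect_attn_impl() -> str:
    """Probe the current runtime; imports are local so this loads without torch."""
    try:
        import flash_attn  # noqa: F401
        flash_ok = True
    except Exception:
        flash_ok = False
    try:
        import torch
    except ImportError:
        return "eager"
    if not torch.cuda.is_available():
        return "eager"
    return choose_attn_impl(flash_ok, True, torch.cuda.get_device_capability())
```

Setting `attn_impl = detect_attn_impl()` in step 4 would replace the import-only check.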

Knobs you can turn:

- Newer GPUs sometimes prefer the newest wheels. If Colab updates its CUDA version, switch to the matching `--index-url` wheel.
- If you want CPU-only (debugging), install the CPU wheels from `https://download.pytorch.org/whl/cpu` instead of the cu118 index (the default PyPI wheels on Linux are CUDA builds). It’ll be slow.

2) Upload a PDF: guard rails

I uploaded the “Attention Is All You Need” paper as a PDF.

```python
from google.colab import files

up = files.upload()
assert len(up) == 1, "Upload exactly one PDF"
pdf_path = list(up.keys())[0]
print("PDF:", pdf_path)
```

Purpose: `files.upload()` opens a Colab file picker so you can select a local PDF. The assert is a simple invariant: exactly one file. If you want batch, remove the assert and loop over `up.items()`.

Common gotcha: wrong file type. Add a quick check:

```python
assert pdf_path.lower().endswith(".pdf"), "Please upload a .pdf file"
```
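For the batch case, `files.upload()` returns a dict mapping filenames to bytes, so you can filter the keys instead of asserting. `pdfs_from_upload` is a hypothetical helper sketching that.

```python
def pdfs_from_upload(uploaded: dict) -> list:
    """Return the uploaded filenames that look like PDFs, sorted for a stable order."""
    return sorted(name for name in uploaded if name.lower().endswith(".pdf"))
```

In Colab you would then loop with `for pdf_path in pdfs_from_upload(files.upload()): ...` and run the remaining steps per file.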

3) PDF → images: DPI is your quality vs speed dial

```python
import os
from pathlib import Path

from pdf2image import convert_from_path

out_dir = Path("dpsk_ocr_outputs")
img_dir = out_dir / "pages"
md_dir = out_dir / "markdown_pages"
os.makedirs(img_dir, exist_ok=True)
os.makedirs(md_dir, exist_ok=True)

IM_DPI = 180  # 160–200 is a good range

# Render each page to a PNG on disk; paths_only=True returns file paths, not PIL images
images = convert_from_path(
    pdf_path,
    dpi=IM_DPI,
    fmt="png",
    output_folder=str(img_dir),
    output_file="page",
    paths_only=True,
)
images = sorted(images)  # sort by filename so pages stay in order
print(f"Extracted {len(images)} page image(s) → {img_dir}")
```

Why: DeepSeek-OCR consumes images, not PDFs. We pre-render each page.

DPI trade-off:

- Lower DPI (e.g., 150-180): faster, smaller VRAM, may miss tiny fonts.

- Higher DPI (e.g., 220-300): sharper tables/small text, more GPU memory and time.

Tip: If you’re OCR-ing scans with tiny text, try IM_DPI = 220. If you hit OOM, drop back.
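DPI maps directly to pixels: page width in inches times DPI. Pixel count therefore grows with the square of the DPI, so going from 180 to 220 DPI is about 1.5× the pixels. The sketch below assumes a US Letter page (8.5 × 11 in). Files rendered by Poppler may differ by a pixel because of rounding.

```python
def page_pixels(width_in: float, height_in: float, dpi: int) -> tuple:
    """Rendered page size in pixels: inches x dots-per-inch, rounded to integers."""
    return round(width_in * dpi), round(height_in * dpi)

if __name__ == "__main__":
    for dpi in (150, 180, 220, 300):
        w, h = page_pixels(8.5, 11, dpi)  # US Letter
        print(f"{dpi} DPI -> {w}x{h} px ({w * h / 1e6:.1f} MP)")
```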

4) Load DeepSeek-OCR: trust_remote_code & attention fallback

```python
import os

import torch
from transformers import AutoModel, AutoTokenizer, GenerationConfig

os.environ["CUDA_VISIBLE_DEVICES"] = "0"  # pin to the first GPU (before CUDA initializes)
torch.backends.cuda.matmul.allow_tf32 = True
try:
    torch.set_float32_matmul_precision("high")
except Exception:
    pass

model_id = "deepseek-ai/DeepSeek-OCR"

# Prefer FlashAttention 2; fall back to eager attention if the package won't import
attn_impl = "flash_attention_2"
try:
    import flash_attn  # noqa: F401
except Exception:
    attn_impl = "eager"

tok = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
if tok.pad_token is None and tok.eos_token is not None:
    tok.pad_token = tok.eos_token

model = AutoModel.from_pretrained(
    model_id,
    trust_remote_code=True,
    _attn_implementation=attn_impl,
    torch_dtype=torch.bfloat16,
    use_safetensors=True,
).to("cuda").eval()
```

Concepts to understand:

- `trust_remote_code=True` lets the model load custom Python from the repo (needed here because `model.infer(...)` is custom). Security-wise, only enable this for repos you trust.
- Attention impl: we prefer FlashAttention for speed, but explicitly fall back to “eager” if the import fails. That’s why your earlier install could “fail” and life still goes on.
- `bfloat16` (bf16): halves memory vs fp32 with minimal quality loss and is widely supported on modern NVIDIA GPUs. If your GPU complains, try `torch_dtype=torch.float16`, or as a last resort `float32` (slower, more VRAM).
- TF32/better matmul: `allow_tf32` and `set_float32_matmul_precision("high")` let NVIDIA GPUs use tensor cores for float32 matrix multiplies; a safe speed win on Ampere+.
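The dtype fallback order above (bf16, then fp16, then fp32) can be automated. Native bf16 tensor-core support arrived with Ampere (compute capability 8.x), so this sketch keys on the device capability. Choosing fp32 only on CPU is an assumption of the sketch, and both helpers are hypothetical.

```python
def pick_dtype_name(cuda_available: bool, capability: tuple = (0, 0)) -> str:
    """bf16 on Ampere+ GPUs, fp16 on older CUDA GPUs, fp32 on CPU (assumed fallback)."""
    if not cuda_available:
        return "float32"
    return "bfloat16" if capability[0] >= 8 else "float16"

def pick_torch_dtype():
    """Probe the runtime and return the matching torch dtype object."""
    import torch  # local import keeps pick_dtype_name dependency-free
    if not torch.cuda.is_available():
        return getattr(torch, pick_dtype_name(False))
    return getattr(torch, pick_dtype_name(True, torch.cuda.get_device_capability()))
```

You would pass `torch_dtype=pick_torch_dtype()` to `from_pretrained` instead of hard-coding `torch.bfloat16`.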

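Small safety switch:

```python
device = "cuda" if torch.cuda.is_available() else "cpu"
model = AutoModel.from_pretrained(...).to(device).eval()  # "..." = same arguments as above
```

Running on CPU will be slow, but this keeps the notebook usable for readers without GPUs. On CPU, also use `attn_impl = "eager"` (FlashAttention is GPU-only). The repo’s custom `infer` code may itself assume CUDA, so treat the CPU path as best-effort.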

Generation config: why these values?

```python
# If the model has no default generation config, start from a fresh one
if not hasattr(model, "generation_config") or model.generation_config is None:
    model.generation_config = GenerationConfig()

gc = model.generation_config
gc.pad_token_id = tok.pad_token_id
gc.eos_token_id = tok.eos_token_id
gc.do_sample = False        # deterministic (greedy) decoding
gc.num_beams = 1            # no beam search (faster)
gc.top_p = 1.0
gc.temperature = None
gc.max_new_tokens = 512     # cap runaway generations
gc.no_repeat_ngram_size = 6
gc.repetition_penalty = 1.15
gc.length_penalty = 0.9     # only used by beam search; no effect with num_beams=1
model.generation_config = gc
```

- Deterministic decoding (`do_sample=False`) keeps outputs stable across runs, which is perfect for OCR.
- `max_new_tokens=512` prevents one page from “running away.” If you see truncation, nudge it to 768/1024, but watch latency.
- Repetition guards (`no_repeat_ngram_size`, `repetition_penalty`) reduce those weird duplicated lines LLMs sometimes emit.
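Below is a toy, pure-Python version of the `no_repeat_ngram_size` rule, written as an illustration rather than the Transformers implementation. Given the tokens generated so far, it returns the tokens that would complete an n-gram that has already appeared. Those are the tokens the rule forbids next.

```python
def banned_next_tokens(tokens: list, n: int) -> set:
    """Tokens that would complete an n-gram already present in `tokens`.

    Look at the last n-1 tokens, find earlier places where that same
    (n-1)-gram occurred, and ban whatever token followed it there.
    """
    if n <= 0 or len(tokens) < n - 1:
        return set()
    prefix = tuple(tokens[len(tokens) - n + 1:])
    banned = set()
    for i in range(len(tokens) - n + 1):
        if tuple(tokens[i:i + n - 1]) == prefix:
            banned.add(tokens[i + n - 1])
    return banned
```

For example, `banned_next_tokens("the cat sat on the cat".split(), 3)` returns `{"sat"}`. With `n=6`, any 6-token run can appear only once per generation. That can also block legitimately repeated text such as identical table rows, so if those come out mangled, a larger value (or disabling the rule) may help.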

5) Cleaning pass: regex to strip detection tags

```python
import re

# Matches <|ref|>...<|/ref|> and <|det|>...<|/det|> spans, across newlines
REF_DET_RE = re.compile(r"<\|(?:ref|det)\|>.*?<\|/(?:ref|det)\|>", re.DOTALL)

def clean_ocr_markdown(s: str) -> str:
    s = REF_DET_RE.sub("", s or "")
    lines, out, last, run = s.splitlines(), [], None, 0
    for line in lines:
        if line == last:
            run += 1
            if run < 4:  # keep at most 3 consecutive copies of a line
                out.append(line)
        else:
            last, run = line, 1
            out.append(line)
    return "\n".join(out).strip()
```

What it does:

- Strips internal tags like `<|ref|> ... <|/ref|>` that the model might add for detection metadata.
- Collapses accidental duplicate lines (keeps at most 3 consecutive copies).

Important fix: in Python, the flag is `re.DOTALL` (all caps). `re.DotAll` doesn’t exist, so using it raises an `AttributeError`. Use:

```python
re.compile(pattern, re.DOTALL)
```

Why line-collapse at 3? It’s a compromise: remove obvious repetition without killing legitimate lists.
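If you want to tune that compromise, the threshold can be made a parameter. `collapse_repeats` is a hypothetical variant of the loop inside `clean_ocr_markdown`: it works on a list of lines and keeps at most `max_run` consecutive copies.

```python
def collapse_repeats(lines: list, max_run: int = 3) -> list:
    """Keep at most `max_run` consecutive copies of any identical line."""
    out, last, run = [], None, 0
    for line in lines:
        if line == last:
            run += 1
        else:
            last, run = line, 1
        if run <= max_run:
            out.append(line)
    return out
```

`max_run=2` tightens the collapser; non-adjacent repeats are always kept.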

6) Inference strategy: fast path, then a smarter retry

```python
prompt = (
    "<image>\n"
    "<|grounding|>Convert the document to clean, concise Markdown.\n"
    "- Use Markdown tables (not HTML) for tabular data.\n"
    "- Do not include detection tags like <|ref|> or <|det|>.\n"
)
BASE_SIZE = 1024
```

Prompt design: think of it like a product requirement:

- Be explicit about Markdown tables (no HTML).

- Ask to avoid detection tags so we don’t clean as much later.

Two-stage strategy:

```python
import time
from pathlib import Path

def infer_once(img_path, image_size, crop_mode, save_results):
    txt = model.infer(
        tok,
        prompt=prompt,
        image_file=str(img_path),
        output_path=str(md_dir),
        base_size=BASE_SIZE,      # global canvas scaling
        image_size=image_size,    # actual input resize
        crop_mode=crop_mode,      # enable region zoom for dense layouts
        save_results=save_results,
        test_compress=False,
        eval_mode=True,
    )
    return (txt or "").strip()

def infer_with_retry(img_path):
    t0 = time.time()
    # 1) fast path: quick & cheap
    txt = infer_once(img_path, image_size=512, crop_mode=False, save_results=False)
    if len(txt) >= 40:  # crude signal that we got real text
        return txt, time.time() - t0, "512/no-crop"

    # 2) smarter path: zoom in & crop (great for tables/tiny fonts)
    mmd = Path(md_dir) / "result.mmd"
    mmd.unlink(missing_ok=True)  # don't pick up a previous page's file in step 3
    txt2 = infer_once(img_path, image_size=640, crop_mode=True, save_results=True)
    if len(txt2) >= 40:
        return txt2, time.time() - t0, "640/crop"

    # 3) last resort: if the helper wrote result.mmd, use it
    #    (completes the original stub; these two mode labels are our own)
    if mmd.exists():
        return mmd.read_text(encoding="utf-8").strip(), time.time() - t0, "640/crop-mmd"
    return txt2 or txt, time.time() - t0, "failed"
```

Why this works:

- Stage 1 is fast for normal pages.

- Stage 2 uses `image_size=640` with `crop_mode=True` on top of `BASE_SIZE=1024`, which matches DeepSeek’s “Gundam” preset, to zoom into dense regions; this is very helpful for tables.

- We time each page and record which mode succeeded. That becomes a mini benchmark for your dataset.

Tip: If your PDFs are table-heavy, flip the order (try crop first).
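To flip the order without rewriting the function, the retry logic can be expressed as an ordered list of attempts. `first_good` is a hypothetical generic helper, not the article’s code. It runs callables in order, returns the first result that clears the length threshold, and otherwise keeps the longest output it saw.

```python
from typing import Callable, List, Tuple

Attempt = Tuple[str, Callable[[], str]]

def first_good(attempts: List[Attempt], min_chars: int = 40) -> Tuple[str, str]:
    """Return (text, label) for the first attempt with at least min_chars characters."""
    best_text, best_label = "", "failed"
    for label, run in attempts:
        text = (run() or "").strip()
        if len(text) >= min_chars:
            return text, label
        if len(text) > len(best_text):
            best_text, best_label = text, label + "(short)"
    return best_text, best_label

# Table-heavy ordering with the infer_once(...) defined above (crop first):
# attempts = [
#     ("640/crop", lambda: infer_once(img, image_size=640, crop_mode=True, save_results=False)),
#     ("512/no-crop", lambda: infer_once(img, image_size=512, crop_mode=False, save_results=False)),
# ]
# text, mode = first_good(attempts)
```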

Throughput notes:

- You’re doing one page at a time. For speed, you could batch across multiple GPUs or processes, but keep it simple in Colab.

7) Persisting results: per-page, combined, and a timings CSV

```python
import csv
import time
from pathlib import Path

combined_md_path = out_dir / (Path(pdf_path).stem + "_combined.md")
timing_csv_path = out_dir / "timings.csv"
t_start = time.time()

with open(combined_md_path, "w", encoding="utf-8") as mdout, \
     open(timing_csv_path, "w", newline="", encoding="utf-8") as csvf:
    writer = csv.writer(csvf)
    writer.writerow(["page_index", "image_name", "seconds", "mode", "chars"])

    for i, img_path in enumerate(images, 1):
        txt, secs, mode = infer_with_retry(img_path)
        cleaned = clean_ocr_markdown(txt)

        # per-page MD
        per_page_md = md_dir / f"page_{i:04d}.md"
        with open(per_page_md, "w", encoding="utf-8") as f:
            f.write((cleaned or "[EMPTY OCR OUTPUT]") + "\n")

        # append to combined
        mdout.write(f"\n\n<!-- Page {i} -->\n\n{cleaned or '[EMPTY OCR OUTPUT]'}\n")

        # benchmarking breadcrumbs
        writer.writerow([i, Path(img_path).name, f"{secs:.2f}", mode, len(cleaned)])
        print(f"Page {i}/{len(images)} | {secs:.2f}s | mode={mode} | chars={len(cleaned)}")

# run summary
print(f"Total time: {time.time() - t_start:.2f} s for {len(images)} pages")
print("Per-page MDs:", md_dir)
print("Combined MD:", combined_md_path)
print("Timings CSV:", timing_csv_path)
```

Output:

```
Total time: 395.05 s for 11 pages
Per-page MDs: dpsk_ocr_outputs/markdown_pages
Combined MD: dpsk_ocr_outputs/NIPS-2017-attention-is-all-you-need-Paper_combined.md
Timings CSV: dpsk_ocr_outputs/timings.csv
```

Why this structure:

- Per-page `.md` files let you re-process only the pages you care about later.
- One combined `.md` is perfect for downstream review or feeding into RAG chunking.
- `timings.csv` is gold for performance tuning. Sort by slow pages and inspect what’s special; it’s often tiny fonts or dense tables.
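Sorting the timings needs only the standard library. The sketch below reads the columns written by the loop above (`page_index`, `image_name`, `seconds`, `mode`, `chars`). `slowest_pages` is a hypothetical helper name.

```python
import csv

def slowest_pages(csv_path: str, k: int = 5) -> list:
    """Return the k slowest rows from timings.csv as dicts, slowest first."""
    with open(csv_path, newline="", encoding="utf-8") as f:
        rows = list(csv.DictReader(f))
    rows.sort(key=lambda r: float(r["seconds"]), reverse=True)
    return rows[:k]
```

Usage: `for r in slowest_pages("dpsk_ocr_outputs/timings.csv", 3): print(r["page_index"], r["seconds"], r["mode"], r["chars"])`. Pages that needed the crop retry, or that produced few characters, are usually the first ones worth opening.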

8) Packaging outputs: one click download

```python
import shutil

from google.colab import files

zip_path = shutil.make_archive("deepseek_ocr_outputs", "zip", root_dir=str(out_dir))
print("ZIP:", zip_path)
files.download(zip_path)
```

Why: Colab sandboxes vanish. Zipping everything means you can walk away with `pages/`, `markdown_pages/`, the combined Markdown file, and `timings.csv` in one go.

GitHub code link:

https://github.com/pillaiharish/LLM-AI-Agents-Learning-Journey/tree/main/notebooks/deepseek-ocr-run

Key concepts

- DPI controls input fidelity → affects accuracy and VRAM/time.
- `base_size` vs `image_size` → think “global canvas” vs “actual resize.”
- `crop_mode=True` → instructs the model to examine sub-regions (great for tables).
- bf16 → half the memory of fp32, near-fp32 quality on modern NVIDIA GPUs. If unsupported, try fp16.
- Deterministic decoding → OCR is extraction, not creative writing; keep `do_sample=False`.
- Retry strategy → guardrails against tricky pages without manual babysitting.
- `trust_remote_code` → required for `model.infer(...)`, but only enable it for trusted repos.

What to tweak when you hit friction?

- Tables broken? Switch to Gundam (`crop_mode=True`, `image_size=640`) or bump `image_size` to 1024 if VRAM allows.
- Out of memory (OOM)? Lower `image_size` (512/640) or `IM_DPI`, use the Tiny/Small presets, disable `crop_mode`, or reduce batch size (process pages one by one). Worst case, switch to CPU (slow) to at least get something.
- Slow? Make sure you’re on a GPU runtime and try installing flash-attn (already in the install cell). If you’re productionizing, move to vLLM to batch across requests.
- `flash_attn` build fails → expected on some Colab GPUs. The loading code already falls back; ignore the warning.
- Regex error → use `re.DOTALL` (not `re.DotAll`).
- Weird repeated lines in Markdown → keep the duplicate-collapser, or tighten it (allow max 2 instead of 3).
- Empty pages → check whether the PDF page is a vector page with tiny text; try `IM_DPI=220` plus crop mode.

Comparing to usual stack

- Classic OCR (Tesseract/PaddleOCR) is great for “pure text” and small jobs. But for layout-heavy docs with tables, DeepSeek-OCR’s VLM approach + Markdown output often means less downstream cleanup.

- Managed services (Textract, Google Document AI) are convenient. If you need private infra, predictable costs, or specific Markdown/table control, this open stack can be more flexible, especially with vLLM and batching.

Hardware notes

- Minimum viable dev: an NVIDIA GPU with 8-12 GB of VRAM for single-page inference; expect seconds per page depending on preset/DPI. Community reports show much faster throughput on high-end Ada/A100 cards.

- Serious batch: use vLLM and a single A100/L40S/A6000-class card; scale by sharding PDFs across workers. The HF model card confirms official vLLM support.
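Sharding can be as simple as a round-robin split of the file (or page) list, with each shard handed to its own worker process. You could pin each worker to one GPU via `CUDA_VISIBLE_DEVICES`, as the notebook does for a single GPU. `shard` is a hypothetical helper; launching and supervising the workers is left out.

```python
def shard(items: list, n_workers: int) -> list:
    """Split items round-robin: worker w gets items w, w+n, w+2n, ..."""
    if n_workers < 1:
        raise ValueError("n_workers must be >= 1")
    return [items[w::n_workers] for w in range(n_workers)]
```

Round-robin keeps shard sizes within one item of each other. If your PDFs vary a lot in length, sorting them by page count before sharding spreads the work more evenly.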

Sources & further reading

- Model card (HF): usage, presets (Tiny/Small/Base/Large/Gundam), and vLLM support.

- Paper (arXiv): “DeepSeek-OCR: Contexts Optical Compression”: 7-20x token reduction.

- Release/GitHub: install + vLLM & Transformers examples.

- Field reports / news: throughput claims and context compression explainers.